OVS mirror offload – monitoring without impacting performance

In a recent blog post, I discussed the performance improvement that can be achieved by running VXLAN with HW offload. In this post I discuss another important feature that we have been working on in the Napatech OVS offload initiative: mirror offload.

Rumor has it that turning on an OVS mirror has a significant performance impact, but I have not previously experimented with it. Continuing the OVS HW offload work, I will therefore explore the performance gains that can be achieved by offloading traffic mirroring.

Like standard OVS+DPDK, Napatech supports forwarding of mirror traffic to virtual or physical ports on a bridge. In addition, Napatech can deliver the mirror to a high-speed NTAPI stream (Napatech API, a proprietary API widely used in zero-copy, zero-packet-loss applications). A host application can use the NTAPI stream to monitor the mirrored traffic with very low CPU overhead.

OVS mirror test cases

To test OVS mirroring I need to settle on a test setup. I want to test the following items:

1. Establish a baseline for what a VM can do. This is needed to determine whether activating the mirror affects the VM. The baselines of interest are:
   - a. RX-only VM capability – useful to determine what the mirror recipient can expect to receive
   - b. Forwarding VM capability – useful to determine what an external mirror recipient can expect to receive
2. Standard OVS+DPDK with mirror to:
   - a. Virtual port – recipient is a VM on the same server
   - b. Physical port – recipient is the external traffic generator
3. Napatech full offload additions to OVS+DPDK with mirror to:
   - a. Virtual port – recipient is a VM on the same server
   - b. Physical port – recipient is the external traffic generator
   - c. Host TAP – recipient is an application (using Napatech API) running on the host

Note that 3c applies only to Napatech. I am not sure whether this is even possible with standard OVS+DPDK; maybe someone can enlighten me?

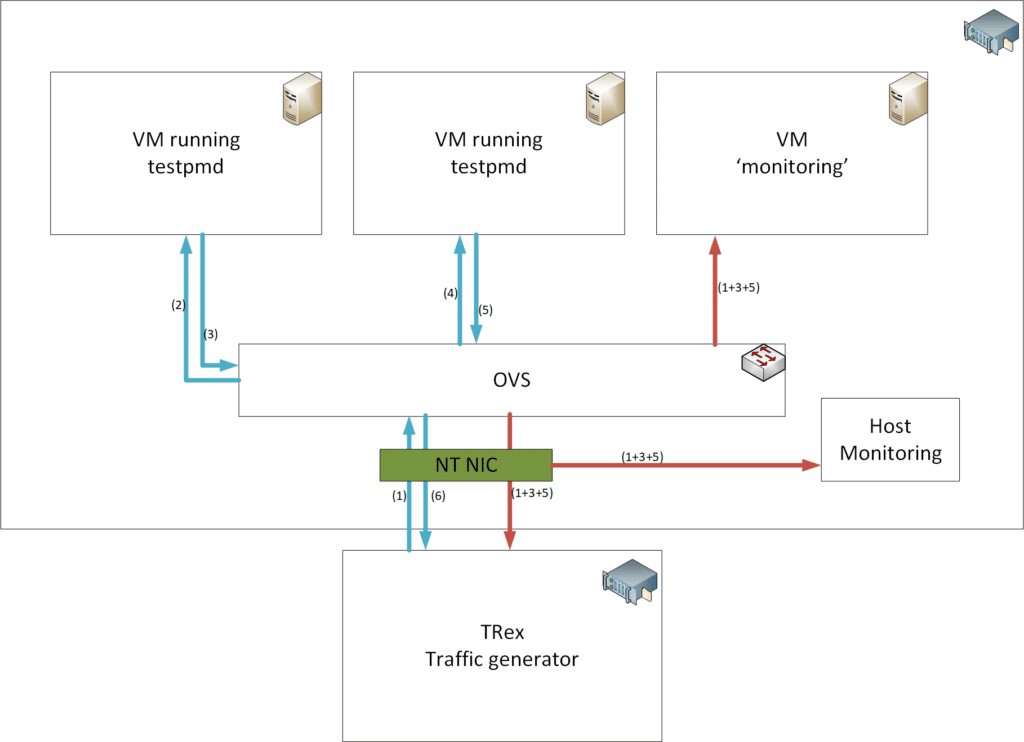

Test setup

I use a setup consisting of two servers, a Dell R730 and an ASUS Z9PE-D8 WS, each with an NT200A01 configured to run at 40Gbps instead of 100Gbps.

| | Dell R730 | ASUS Z9PE-D8 WS |
| --- | --- | --- |
| CPU | Intel(R) Xeon(R) CPU E5-2690 v3 @ 2.60GHz | Intel(R) Xeon(R) CPU E5-2650 0 @ 2.00GHz |
| CPU sockets | 2 | 2 |
| Cores | 12 per socket | 8 per socket |
| RAM | 64GB | 64GB |
| NIC | NT200A01 | NT200A01 |

The Dell R730 will be running the OVS+DPDK environment and the ASUS the TRex traffic generator.

OVS+DPDK will be configured with the following topologies in the different tests:

| Topology | Tests |
| --- | --- |
| 1 physical + 1 virtual port | 1a, 1b |
| 1 physical + 3 virtual ports | 2a, 3a |
| 2 physical + 2 virtual ports | 2b, 3b, 3c |

The VMs will be running the following programs:

- ‘monitor’ – a very simple DPDK application based on the ‘skeleton’ example, with the addition that it prints the throughput to stdout every second

- ‘testpmd’ – a standard DPDK test application. This application can be configured to forward packets to specific MAC addresses, so it is very useful when creating the chain shown in the picture above.

OVS+DPDK will be running in standard MAC learning mode: it learns which MAC address is reachable through which port and automatically creates forwarding rules from what it has learned. The traffic generator and the VMs are configured like this:

| | MAC address | Command to forward to next recipient |
| --- | --- | --- |
| TRex | 00:00:00:00:00:10 | Set destination MAC to 00:00:00:00:00:01 |
| VM1 | 00:00:00:00:00:01 | ./testpmd -c 3 -n 4 -- -i --eth-peer=0,00:00:00:00:00:02 --nb-cores=1 --forward-mode=mac |
| VM2 | 00:00:00:00:00:02 | ./testpmd -c 3 -n 4 -- -i --eth-peer=0,00:00:00:00:00:10 --nb-cores=1 --forward-mode=mac |
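To make the forwarding chain concrete, here is a minimal Python sketch of a MAC-learning bridge. It is a toy model, not OVS code: the `LearningSwitch` class and the port names `phy0`, `vp1` and `vp2` are hypothetical, and only the MAC addresses come from the table above. It illustrates that once all addresses are learned, every packet sent by TRex crosses the bridge three times.

```python
class LearningSwitch:
    """Toy MAC-learning bridge: learns source MACs, floods unknown destinations."""

    def __init__(self, ports):
        self.ports = list(ports)
        self.mac_table = {}  # MAC address -> port it was last seen on

    def forward(self, in_port, src_mac, dst_mac):
        """Learn src_mac on in_port and return the list of output ports."""
        self.mac_table[src_mac] = in_port
        out_port = self.mac_table.get(dst_mac)
        if out_port is None:
            # Unknown destination: flood to every port except the ingress port.
            return [p for p in self.ports if p != in_port]
        return [out_port]


TREX, VM1, VM2 = "00:00:00:00:00:10", "00:00:00:00:00:01", "00:00:00:00:00:02"

# Hypothetical port names, chosen only for this illustration.
PORT_OF = {TREX: "phy0", VM1: "vp1", VM2: "vp2"}
# Each node sends to the next recipient, as configured in the table.
NEXT_HOP = {TREX: VM1, VM1: VM2, VM2: TREX}


def bridge_crossings(switch, first_sender=TREX):
    """Follow one packet around the chain; return (in_port, out_ports) per hop.

    Flooded copies are recorded but not followed any further.
    """
    hops = []
    sender = first_sender
    while True:
        receiver = NEXT_HOP[sender]
        out_ports = switch.forward(PORT_OF[sender], sender, receiver)
        hops.append((PORT_OF[sender], out_ports))
        sender = receiver
        if sender == first_sender:
            return hops


switch = LearningSwitch(PORT_OF.values())
first_pass = bridge_crossings(switch)   # some hops flood while MACs are unknown
second_pass = bridge_crossings(switch)  # everything learned: unicast only
for in_port, out_ports in second_pass:
    print(f"{in_port} -> {out_ports}")
```

With a mirror that selects all traffic on the bridge, each of these three crossings produces one mirrored copy.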

Tests

Finding the baseline

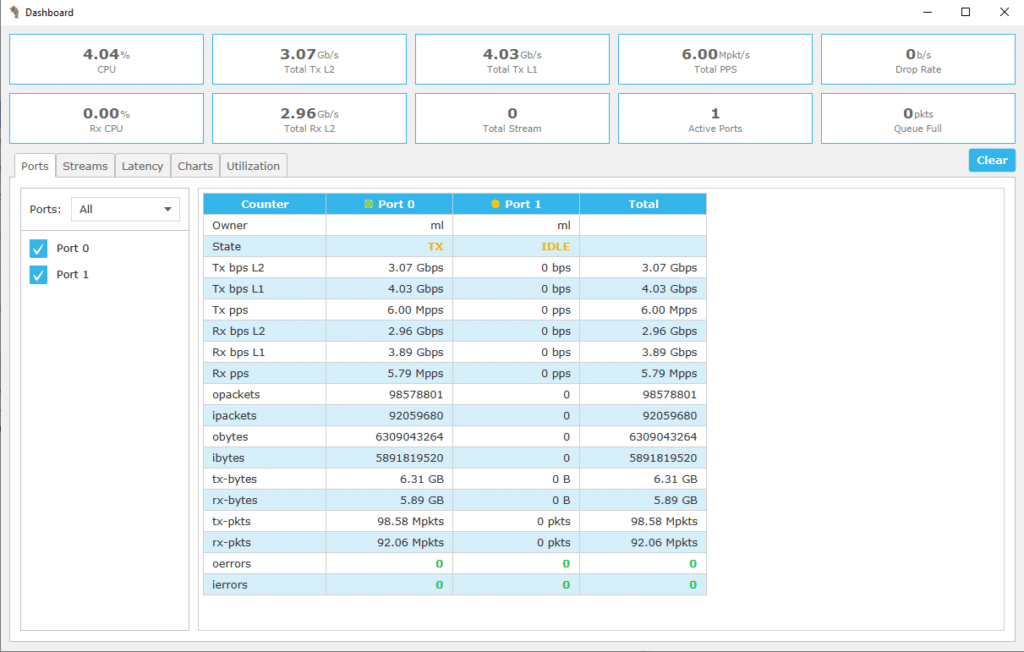

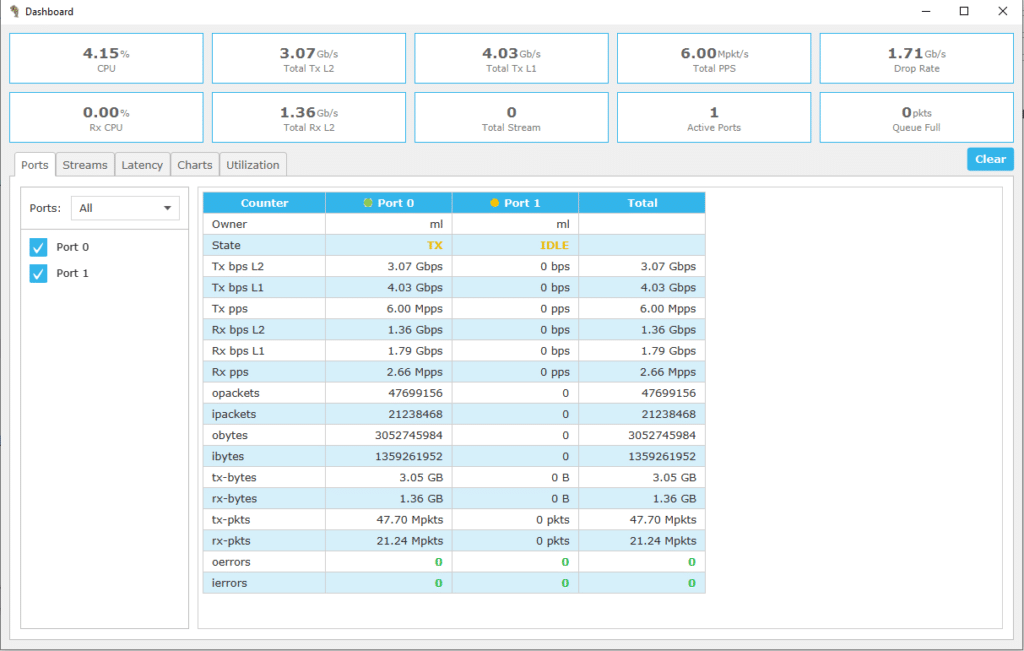

The baseline tests were performed by configuring TRex to generate 64B packets at 40Gbps and monitoring the output in either the ‘monitor’ app (RX only) or the TRex statistics (forwarding). The baseline results showed the following:

| | RX only | Forwarding |
| --- | --- | --- |
| OVS+DPDK | 7.9Mpps | 7.6Mpps |
| OVS+DPDK full offload | 14.7Mpps | 6.5Mpps |

./monitor … Core 0 receiving packets. [Ctrl+C to quit] Packets: 7931596 Throughput: 3807.166 Mbps

./monitor … Core 0 receiving packets. [Ctrl+C to quit] Packets: 14735328 Throughput: 7072.957 Mbps
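The Mbps figures printed by ‘monitor’ can be related to the packet counts with a small calculation. The sketch below assumes that the app counts 60 bytes per packet, i.e. the 64B frame without the 4-byte FCS. That is my inference from the numbers, not something the ‘monitor’ output states, and the function name is hypothetical.

```python
FRAME_BYTES = 64  # frame size generated by TRex
FCS_BYTES = 4     # assumption: the FCS is not counted by the monitor app


def mbps_from_pps(pps, bytes_per_packet):
    """Convert a packet rate to megabits per second for a fixed packet size."""
    return pps * bytes_per_packet * 8 / 1e6


# Packet counts per second taken from the two monitor outputs above.
for pps in (7_931_596, 14_735_328):
    mbps = mbps_from_pps(pps, FRAME_BYTES - FCS_BYTES)
    print(f"{pps} pkts/s -> {mbps:.3f} Mbps")
```

Under that assumption the computed values agree with the 3807.166 Mbps and 7072.957 Mbps shown in the two outputs.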

A common baseline for the two test scenarios is to forward 6Mpps (64B packets) from TRex. This rate lets each VM forward with zero loss and stays within the capabilities of the physical mirror port running at 40Gbps, which has to transmit the forwarded traffic three times: once for each hop in the chain (TRex to VM1, VM1 to VM2 and VM2 back to TRex), for a total of 18Mpps.
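As a sanity check on that choice, the following sketch works out the packet-rate budget of a 40Gbps port. It assumes standard Ethernet framing overhead of 20 bytes per frame on the wire (preamble, start-of-frame delimiter and inter-frame gap); the constant and function names are mine.

```python
ETH_OVERHEAD_BYTES = 20  # preamble (7) + SFD (1) + inter-frame gap (12)


def line_rate_pps(link_bps, frame_bytes):
    """Maximum packets per second a link can carry for a given frame size."""
    return link_bps / ((frame_bytes + ETH_OVERHEAD_BYTES) * 8)


LINK_BPS = 40e9        # NT200A01 port speed used in this setup
FRAME_BYTES = 64       # packet size generated by TRex
OFFERED_PPS = 6e6      # common baseline rate
BRIDGE_CROSSINGS = 3   # TRex -> VM1, VM1 -> VM2, VM2 -> TRex

max_pps = line_rate_pps(LINK_BPS, FRAME_BYTES)
mirror_pps = OFFERED_PPS * BRIDGE_CROSSINGS
mirror_wire_bps = mirror_pps * (FRAME_BYTES + ETH_OVERHEAD_BYTES) * 8

print(f"40G line rate at 64B: {max_pps / 1e6:.2f} Mpps")
print(f"Expected mirror load: {mirror_pps / 1e6:.1f} Mpps "
      f"({mirror_wire_bps / 1e9:.3f} Gbps on the wire)")
```

The expected mirror load of 18Mpps is well below the 64B line rate of a 40Gbps port, so the physical mirror port itself should not be the bottleneck.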

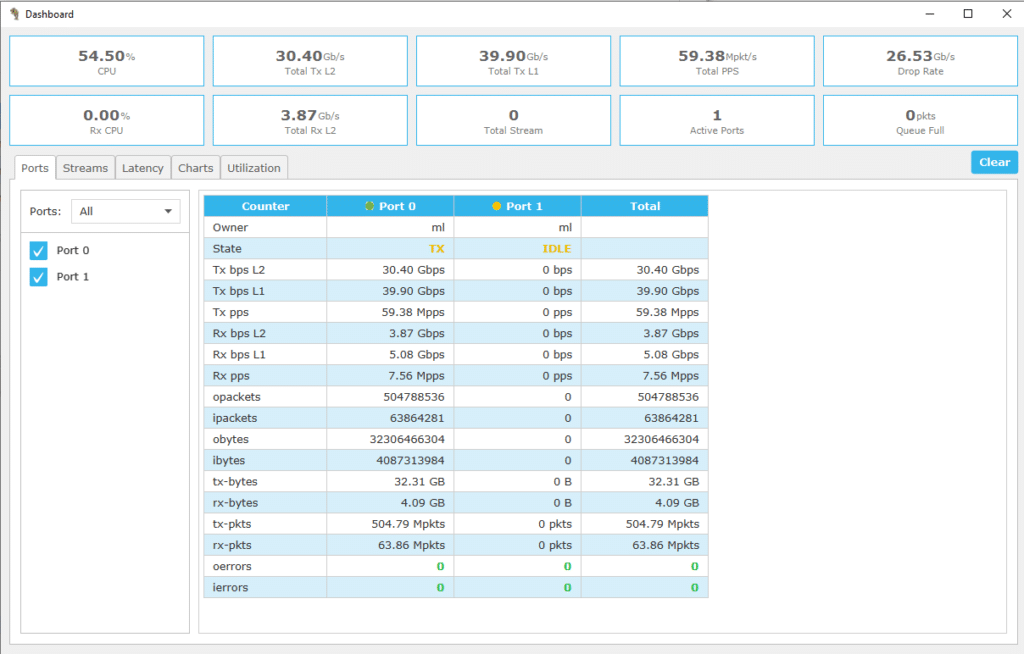

Mirror to virtual port

The virtual port mirror is created using the following command for OVS+DPDK:

ovs-vsctl add-port br0 dpdkvp4 -- set Interface dpdkvp4 type=dpdkvhostuserclient options:vhost-server-path="/usr/local/var/run/stdvio4" -- --id=@p get port dpdkvp4 -- --id=@m create mirror name=m0 select-all=true output-port=@p -- set bridge br0 mirrors=@m

and like this for OVS+DPDK full offload:

ovs-vsctl add-port br0 dpdkvp4 -- set interface dpdkvp4 type=dpdk options:dpdk-devargs=eth_ntvp4 -- --id=@p get port dpdkvp4 -- --id=@m create mirror name=m0 select-all=true output-port=@p -- set bridge br0 mirrors=@m
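Both commands share the same mirror part; only the way the port is added differs. The sketch below isolates that shared part as a hypothetical Python helper, `mirror_command`, which only builds the argument list and does not run anything. It assumes the output port already exists on the bridge and uses only the `ovs-vsctl` syntax shown in the commands above.

```python
import shlex


def mirror_command(bridge, port, mirror_name="m0"):
    """Build the ovs-vsctl arguments that mirror all bridge traffic to `port`.

    Assumes `port` has already been added to `bridge`.
    """
    return [
        "ovs-vsctl",
        # Look up the output port and remember it as @p.
        "--", "--id=@p", "get", "port", port,
        # Create a mirror that selects all traffic and sends it to @p.
        "--", "--id=@m", "create", "mirror", f"name={mirror_name}",
        "select-all=true", "output-port=@p",
        # Attach the mirror to the bridge.
        "--", "set", "bridge", bridge, "mirrors=@m",
    ]


print(shlex.join(mirror_command("br0", "dpdkvp4")))
```

The three `--`-separated parts run as one transaction, which is what lets the `@p` and `@m` references resolve. To return to the unmirrored baseline between tests, the mirror can be removed again with `ovs-vsctl clear bridge br0 mirrors`.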

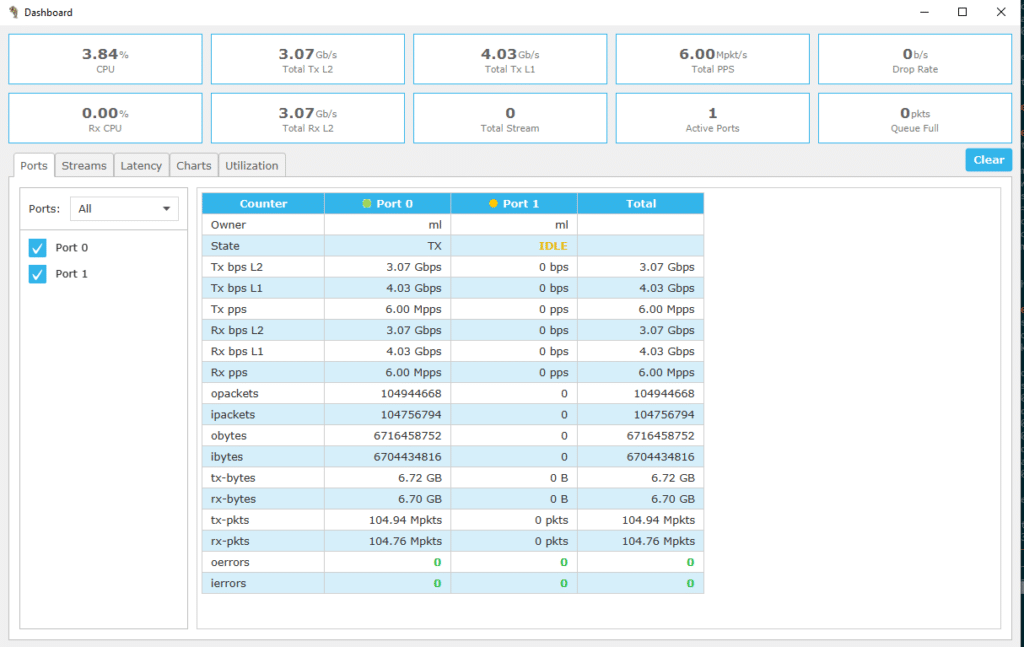

The ‘monitor’ app is used in VM3 to determine the throughput of the mirror port, and the RX statistics of TRex (port 0) are also observed to see whether activating the mirror affects the forwarding performance.

| | Monitoring VM (Expect 18Mpps) | TRex RX (Expect 6Mpps) |
| --- | --- | --- |
| OVS+DPDK | 7.8Mpps | 2.7Mpps |
| OVS+DPDK full offload | 14.9Mpps | 6.0Mpps |

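Native OVS+DPDK really suffers in this test: the mirror VM receives only 7.8Mpps of the expected 18Mpps, and the forwarding path drops from 6Mpps to 2.7Mpps. With full offload the forwarding path is unaffected, and the 14.9Mpps seen by the monitoring VM is in line with the RX-only VM baseline of 14.7Mpps, which suggests that the receiving VM rather than the mirror is the limit.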

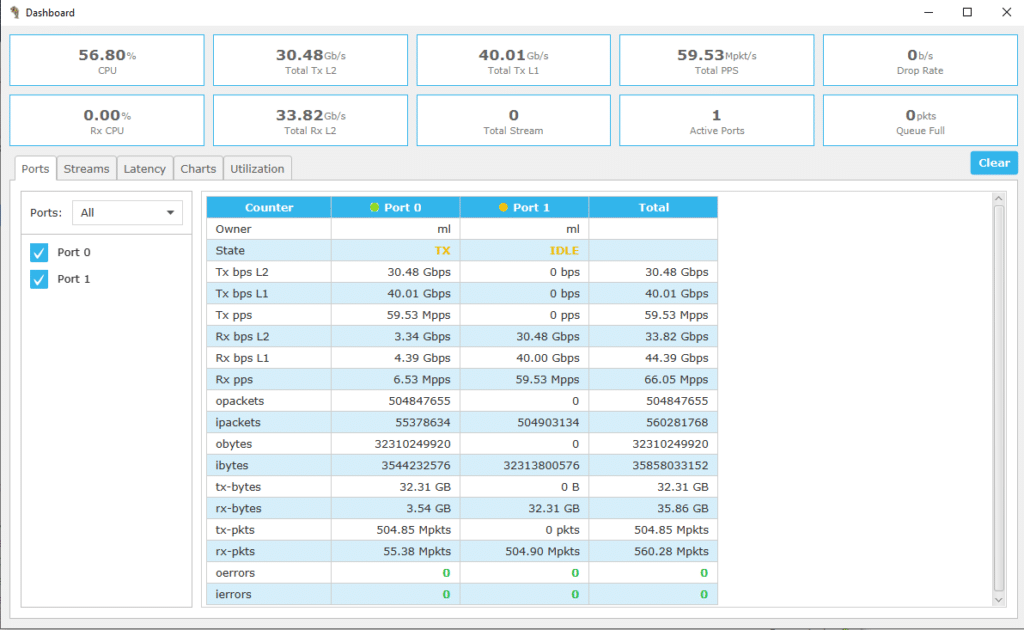

Mirror to physical port

The physical port mirror is created using the following command and it is the same for both OVS+DPDK and the fully offloaded version:

ovs-vsctl add-port br0 dpdk1 -- set interface dpdk1 type=dpdk options:dpdk-devargs=class=eth,mac=00:0D:E9:05:AA:64 -- --id=@p get port dpdk1 -- --id=@m create mirror name=m0 select-all=true output-port=@p -- set bridge br0 mirrors=@m

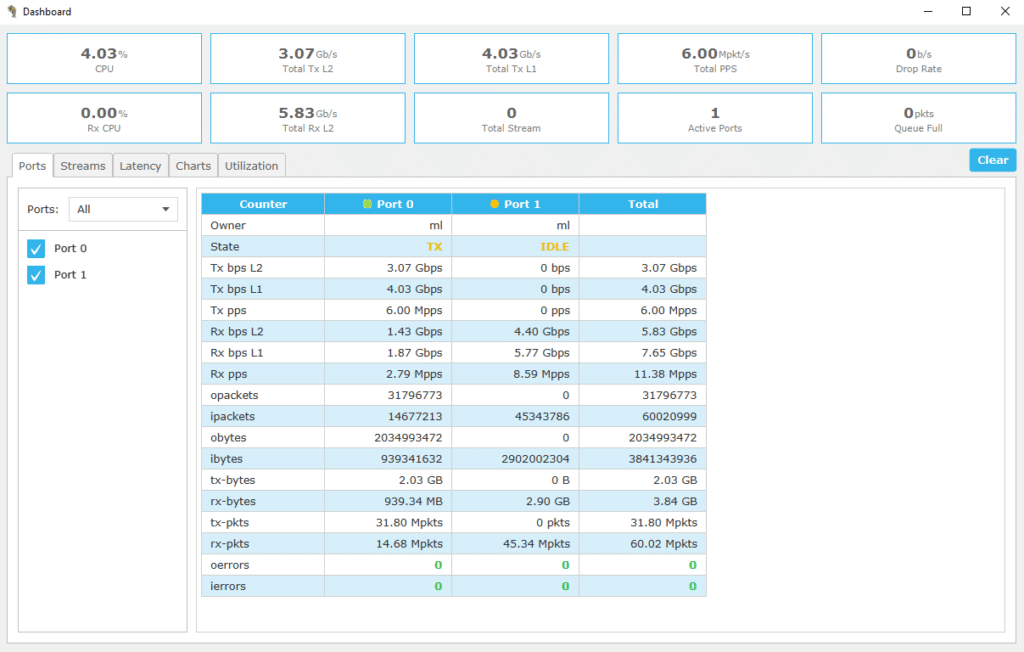

The TRex statistics on port 1 are used to determine the throughput of the mirror port. The TRex port 0 shows the forwarding performance.

| | HW mirror (Expect 18Mpps) | TRex RX (Expect 6Mpps) |
| --- | --- | --- |
| OVS+DPDK | 8.6Mpps | 2.8Mpps |
| OVS+DPDK full offload | 18.0Mpps | 6.0Mpps |

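In this test native OVS+DPDK again suffers performance degradation on both the mirror and the forwarding path, while the fully offloaded version delivers the expected 18Mpps on the mirror port without affecting forwarding.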

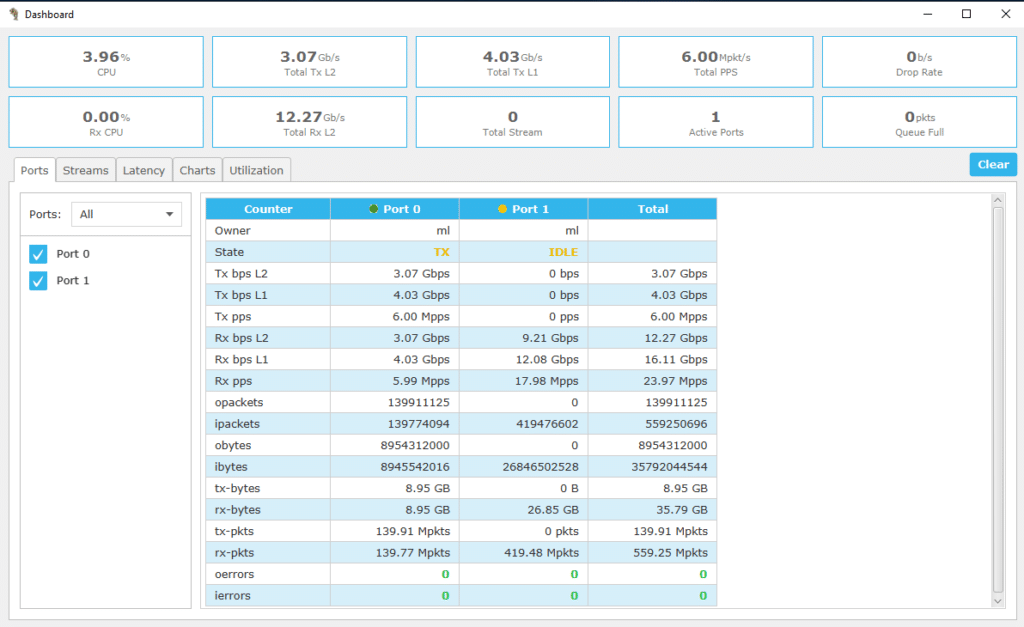

Mirror to ‘host tap’

The mirror to a host application uses the same setup as the physical mirror port.

| | HW mirror (Expect 18Mpps) | TRex RX (Expect 6Mpps) |
| --- | --- | --- |
| OVS+DPDK full offload | 18.0Mpps | 6.0Mpps |

root@dell730_ml:~/andromeda# /opt/napatech3/bin/capture -s 130 -w capture (v. 0.4.0.0-caffee) … Throughput: 17976315 pkts/s, 11312.309 Mbps

Evaluation

The test results clearly show the potential of offloading the mirror functionality. After all the results had been gathered I performed one more test, because I had not considered VM-to-VM forwarding performance as part of the baseline. The results were interesting: it turned out that forwarding between two VMs in native OVS+DPDK affects the overall forwarding performance. I was not able to get all 6Mpps forwarded with native OVS+DPDK, whereas the fully offloaded version showed no issues.
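To put the mirror results side by side, this short sketch expresses each measurement as a percentage of the expected rate. The numbers are copied from the result tables in this post; the dictionary layout and function name are only for illustration.

```python
EXPECTED_MIRROR_MPPS = 18.0
EXPECTED_FORWARD_MPPS = 6.0

# (mirror target, implementation) -> (mirror Mpps, forwarding Mpps)
RESULTS = {
    ("virtual port", "OVS+DPDK"): (7.8, 2.7),
    ("virtual port", "full offload"): (14.9, 6.0),
    ("physical port", "OVS+DPDK"): (8.6, 2.8),
    ("physical port", "full offload"): (18.0, 6.0),
    ("host tap", "full offload"): (18.0, 6.0),
}


def percent_of_expected(measured, expected):
    """Measured rate as a whole-number percentage of the expected rate."""
    return round(100 * measured / expected)


for (target, impl), (mirror, forward) in RESULTS.items():
    print(f"{target:13} {impl:12} "
          f"mirror {percent_of_expected(mirror, EXPECTED_MIRROR_MPPS):3d}%  "
          f"forwarding {percent_of_expected(forward, EXPECTED_FORWARD_MPPS):3d}%")
```

In these runs native OVS+DPDK stays below half of the expected rate on both paths, while the offloaded version keeps forwarding at 100% in every case.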

Future work

The full offload of OVS shows great potential, and we can take it even further. The Napatech mirror solution can also filter the mirrored traffic, which reduces the traffic going out of the mirror port so that the monitoring application only receives what it is interested in. The filtering was not available at the time of writing, but it is a rather quick thing to add, so I might come back to it at a later point.