
How To Make Server-based Networking Solutions Reliable

With the internet as the backbone of modern society, we depend heavily on the availability and quality of internet services. The need for availability and quality is addressed in each layer of the internet hierarchy (core, distribution and access) through redundant data path topologies, protection schemes and quality-of-service schemes.

Standard and proprietary servers are increasingly being deployed in network solutions. Nowhere else are the latest high-performance CPU architectures available at such low cost. This fact is hard to ignore for CPU-intensive networking use cases, and over the last decade it has caused many networking applications to migrate to server-based platforms. The servers typically integrate with networking adapters through the latest-generation PCIe interface. Networking adapters come in different implementations, ranging from low-performance standard Network Interface Cards (NICs) to high-performance FPGA or NPU based platforms. In both cases, the provider of the end product is left with the burden of integrating the PCIe based networking adapter with the server.

High-performance FPGA based networking adapters bring obvious benefits to demanding use cases, as they can offload the CPU by handling upgradeable features in real time, at high bandwidth and with predictable performance and timing. The market offers several FPGA based solutions that seem identical from a packet-processing feature point of view. There are, however, many details in the design of a networking adapter that, left unattended, will stand between the product and a successful, reliable server deployment throughout the lifecycle of the end product. I will address a few of these details below.

SHOCK AND VIBRATION:

The typical PCIe product is delivered to the end user for integration in its original packaging, whereas the networking adapter in a server-based networking product will typically be delivered to the end user preinstalled in the server. This difference has a huge impact on the shock and vibration robustness requirements for the networking adapter. Both shock and vibration robustness are challenged during transportation to the end customer. The packaged end product is susceptible to rough handling by the courier on its way to the end user. Vibration robustness is typically challenged during air transportation, where the continuous vibration in the plane can have a fatal impact on the connectivity of the FPGA based networking adapter if it is not designed and validated to withstand this exposure.

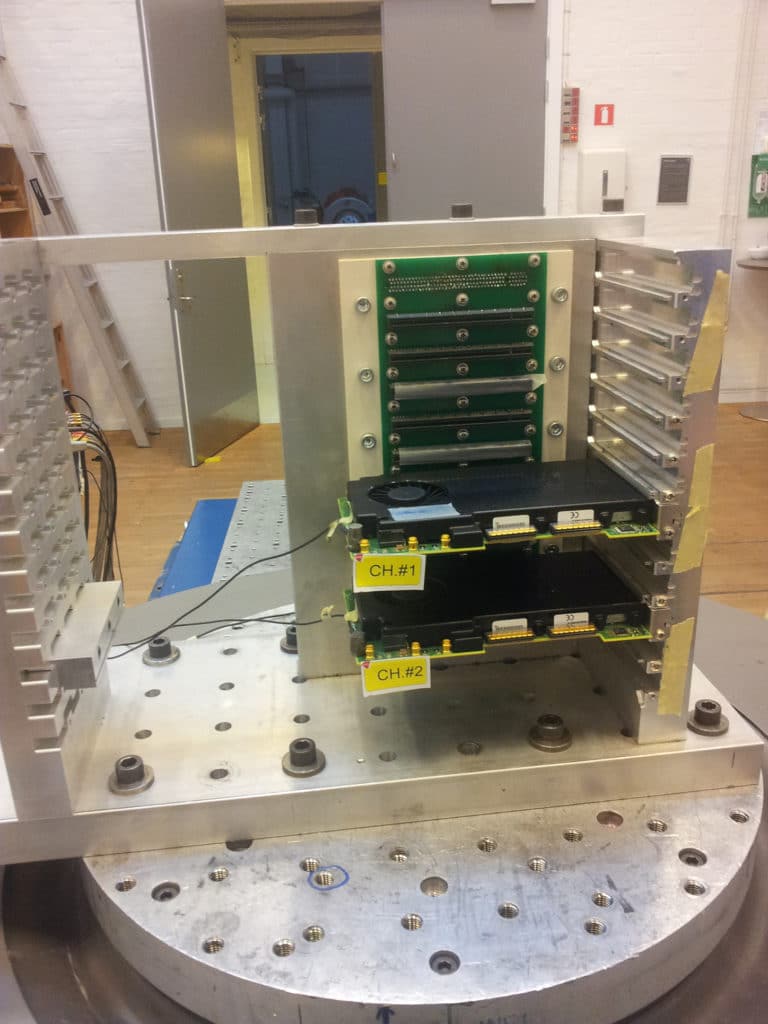

Figure 1: Shock & vibration testing, black wires connect to local accelerometers on the DUTs

MARGIN OPTIMIZATION ON EXTERNAL INTERFACES:

PCIe interface

One consequence of separating out the networking frontend into a networking adapter is the PCIe interface required to hook the data path up to server memory. Integrators do not want to spend their time getting a trivial PCIe link to work reliably. To guarantee “plug and play” at the PCIe level, the provider of the FPGA based networking adapter needs to select an FPGA with best-in-class transceiver technology, optimize the PCB design connecting the FPGA transceiver to the PCIe edge connector, and document the resulting performance through participation in PCI-SIG compliance workshops. This results in the right to use the “PCI-SIG” trademark on the product and in the product documentation.

Front ports:

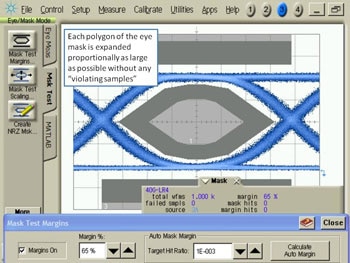

A typical use case for networking adapters is packet capture on the Rx/Tx data path between two network elements. In this use case it is vital for the application that the product has margin-optimized receiver capabilities, because a capture device has no say in whether a packet is retransmitted when bit errors occur. As with the PCIe interface, the solution is carefully selected FPGA transceiver technology and low-loss PCB signaling. Similarly, the Tx performance of the networking adapter is determined by the selected FPGA transceiver technology and by careful optimization of the transceiver settings.

Figure 2: Example of front port eye mask margin optimization
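To give a feel for why receiver margin matters to a capture device, the short sketch below estimates the average time between bit errors on a fully loaded link. The line rates and bit error ratios are illustrative assumptions, not figures from this article or from any Napatech product, and the function name is hypothetical.

```python
def seconds_between_bit_errors(line_rate_bps: float, ber: float) -> float:
    """Mean time between bit errors on a link running at full line rate.

    line_rate_bps: link speed in bits per second.
    ber: bit error ratio (errored bits / total bits).
    """
    if line_rate_bps <= 0 or ber <= 0:
        raise ValueError("line rate and BER must be positive")
    return 1.0 / (line_rate_bps * ber)


# Illustrative values only (assumptions, not measured data).
for rate_gbps in (10, 100):
    for ber in (1e-12, 1e-15):
        t = seconds_between_bit_errors(rate_gbps * 1e9, ber)
        print(f"{rate_gbps:>3} Gbit/s at BER {ber:.0e}: "
              f"one bit error every {t:,.0f} s on average")
```

Under these assumptions, a tap that cannot ask for retransmission loses or corrupts a captured packet every time such an error lands on its receiver, which is why extra receiver margin translates directly into capture quality.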

THERMAL PERFORMANCE

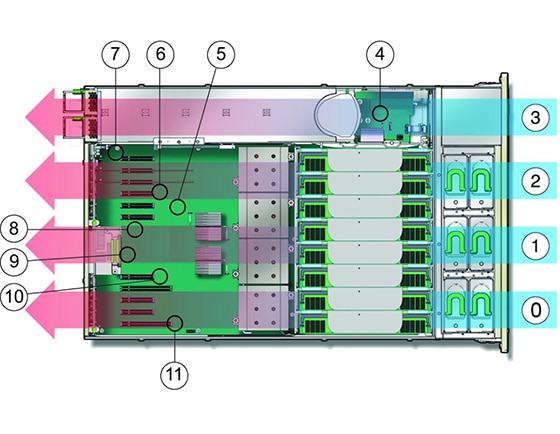

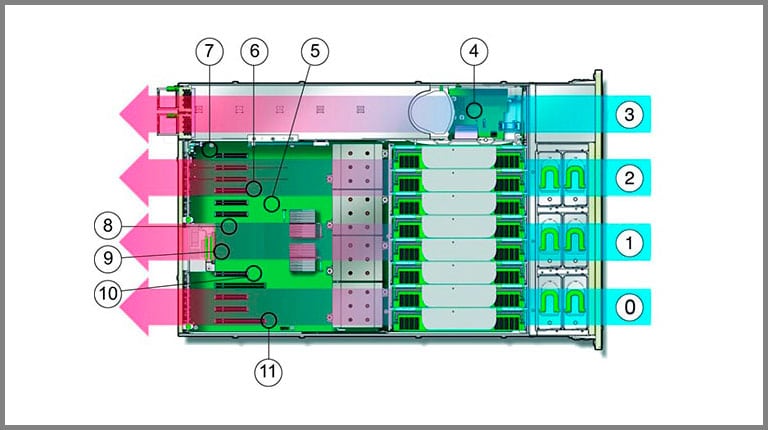

Modern servers provide a controlled thermal environment for the motherboard functionality as well as the add-in cards. Whereas previous server generations were equipped with free-running fans that provided plenty of cooling, new generations control fan speed based on feedback from internal thermal monitoring points. A high-performance FPGA based networking adapter is by nature a product with high power consumption. To guarantee successful integration and high-margin operation in any slot, in any modern server, the thermal design of an FPGA based networking adapter must take full control of the cooling of its internal hot spots and guarantee operation based solely on the add-in card ambient temperature, without requirements for airflow.

Figure 3: Modern server, numbers 4 to 11 represent thermal feedback points
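The effect of feedback-controlled fans on an add-in card can be illustrated with a minimal sketch. It assumes a simple linear fan curve driven by the hottest of the server's own monitoring points; the sensor names, temperatures and curve parameters are all hypothetical, and real server management controllers use their own, often more elaborate, policies.

```python
def fan_duty_percent(sensor_temps_c, idle_c=35.0, max_c=75.0, min_duty=20.0):
    """Map the hottest monitored sensor to a fan duty cycle (linear ramp).

    sensor_temps_c: dict of sensor name -> temperature in degrees C.
    Below idle_c the fans run at min_duty; at or above max_c they run at 100%.
    """
    hottest = max(sensor_temps_c.values())
    if hottest <= idle_c:
        return min_duty
    if hottest >= max_c:
        return 100.0
    span = (hottest - idle_c) / (max_c - idle_c)
    return min_duty + span * (100.0 - min_duty)


# Hypothetical readings from the server's own feedback points.
server_sensors = {"cpu0": 48.0, "cpu1": 46.0, "dimm_bank": 40.0, "inlet": 25.0}

# Hypothetical adapter hot spot that is NOT part of the server's feedback loop.
adapter_fpga_temp_c = 92.0

duty = fan_duty_percent(server_sensors)
print(f"Fan duty: {duty:.0f}% while the adapter FPGA sits at "
      f"{adapter_fpga_temp_c:.0f} C")
```

In this sketch the fans stay at a moderate duty cycle because none of the monitored points is hot, regardless of the adapter's own temperature. That is the situation the adapter's thermal design has to cope with on its own.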

EVENT MONITORING AND HANDLING

To successfully replace the older, typically blade-based proprietary system with a server-based solution, networking adapters need to monitor key system metrics. This enables event handling at runtime and the storing of log information for effective root cause analysis in case of a malfunction. Some of the relevant metrics are device and board temperatures, voltages and currents, and interface and protocol events.
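As a rough illustration of this kind of monitoring, the sketch below checks a sample of metrics against limits and logs every out-of-range reading for later root cause analysis. The metric names, limit values and function names are hypothetical and chosen only for the example; they do not describe an actual adapter API.

```python
import logging
from dataclasses import dataclass


@dataclass(frozen=True)
class Limit:
    low: float
    high: float


# Hypothetical metrics and limits, for illustration only.
LIMITS = {
    "fpga_temp_c": Limit(0.0, 95.0),
    "board_temp_c": Limit(0.0, 80.0),
    "vcc_core_v": Limit(0.82, 0.88),
    "input_current_a": Limit(0.0, 6.0),
}


def check_metrics(sample, limits=LIMITS):
    """Return (metric, value, limit) for every reading outside its limits."""
    events = []
    for name, value in sample.items():
        limit = limits.get(name)
        if limit is not None and not (limit.low <= value <= limit.high):
            events.append((name, value, limit))
    return events


def handle_sample(sample, log):
    """Log each out-of-range reading and return the list of events."""
    events = check_metrics(sample)
    for name, value, limit in events:
        log.warning("%s=%.3f outside [%.3f, %.3f]",
                    name, value, limit.low, limit.high)
    return events


logging.basicConfig(level=logging.INFO,
                    format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("adapter.monitor")

# One made-up sample: the FPGA temperature is above its limit.
sample = {"fpga_temp_c": 97.0, "board_temp_c": 61.0,
          "vcc_core_v": 0.85, "input_current_a": 4.2}
events = handle_sample(sample, log)
```

A real implementation would also react to the events, for example by throttling or raising an alarm, and would persist the log so it survives a restart.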

The integration challenges in the server have a huge impact on the design, validation, production and testing of the Napatech FPGA based network accelerators. We know that we are only successful if our customer is successful with the integration, which is why we have a strong focus on the above implementation details and methodologies.

With all integration issues addressed, can you afford not to have a Napatech FPGA based accelerator in your next server-based product?