DATA CENTER

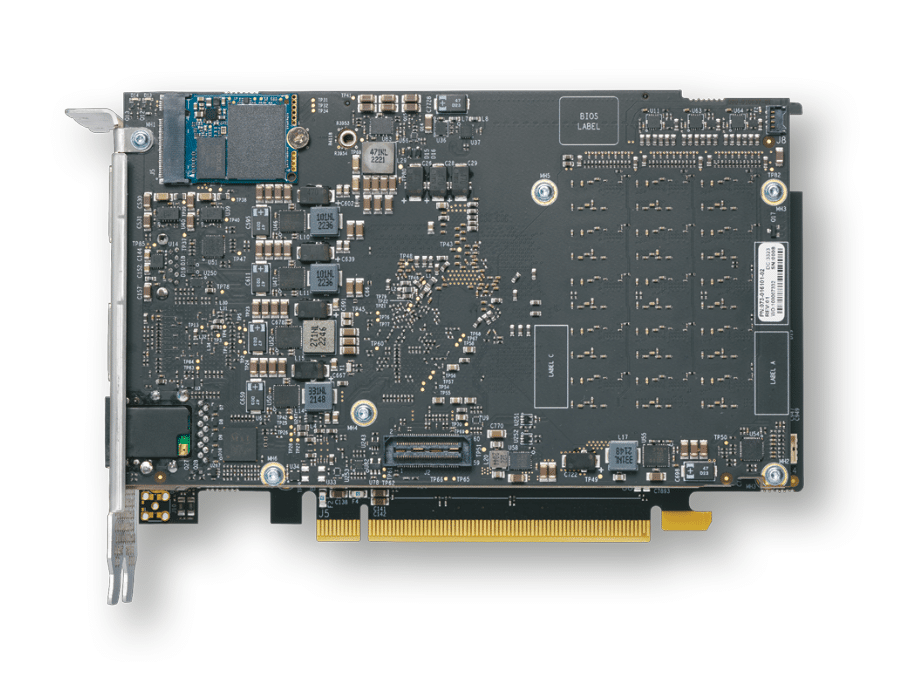

Powerful Intel®-based Infrastructure Processing Unit (IPU)

The Napatech F2070X Infrastructure Processing Unit (IPU) is a 2x100GbE PCIe card with an Intel® Agilex® AGFC023 FPGA and an Intel® Xeon® D SoC. The combination of an FPGA and a full-fledged Xeon CPU on a single PCIe card allows for unique offload capabilities. Coupled with Napatech software, the F2070X is the perfect solution for network, storage and security offload and acceleration. It enables virtualized cloud, cloud-native or bare-metal server virtualization with tenant isolation.

Customization on demand

The F2070X uniquely offers both programmable hardware and software, so you can tailor the IPU to the most demanding and specific needs in your network and modify and enhance its capabilities over the life of the deployment. It is based on the Intel Application Stack Acceleration Framework (ASAF), which supports the integration of software and IP from Intel, Napatech, third parties and homegrown solutions. This one-of-a-kind architecture combines hardware performance with the speed of software innovation.

Scalable platform

The Napatech F2070X comes in a standard configuration and also supports several combinations of Intel® FPGAs, Xeon® D processors, and memory. This enables platform configurations tailored to the requirements of specific use cases.

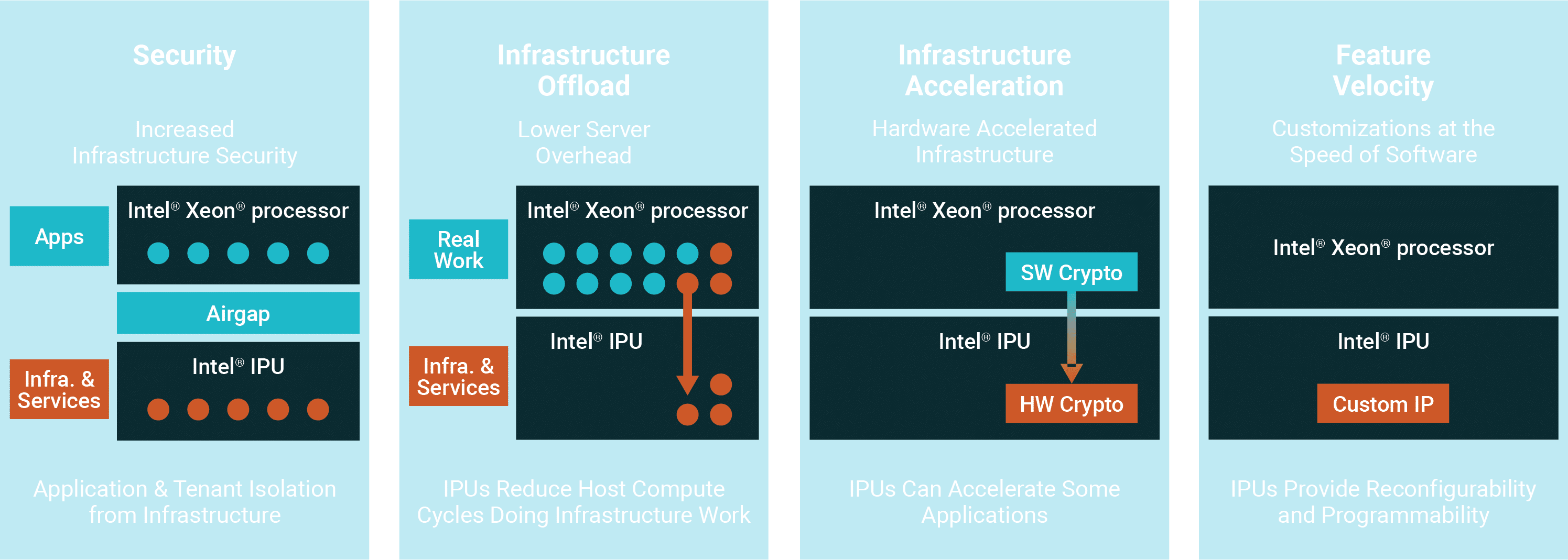

This IPU-based architecture has several major benefits:

- First, the strong separation of infrastructure functions and tenant workloads allows tenants to take full control of the CPU.

- Guests can fully control the CPU with their own software, while the cloud service provider (CSP) maintains control of the infrastructure and the Root of Trust.

- Second, the cloud operator can offload infrastructure tasks to the IPU. This helps maximize revenue.

- Accelerators help process these tasks efficiently, minimizing latency and jitter and maximizing revenue from the CPU.

- And third, IPUs allow for a fully diskless server architecture in the cloud data center.

- This simplifies data center architecture while adding flexibility for the CSP.

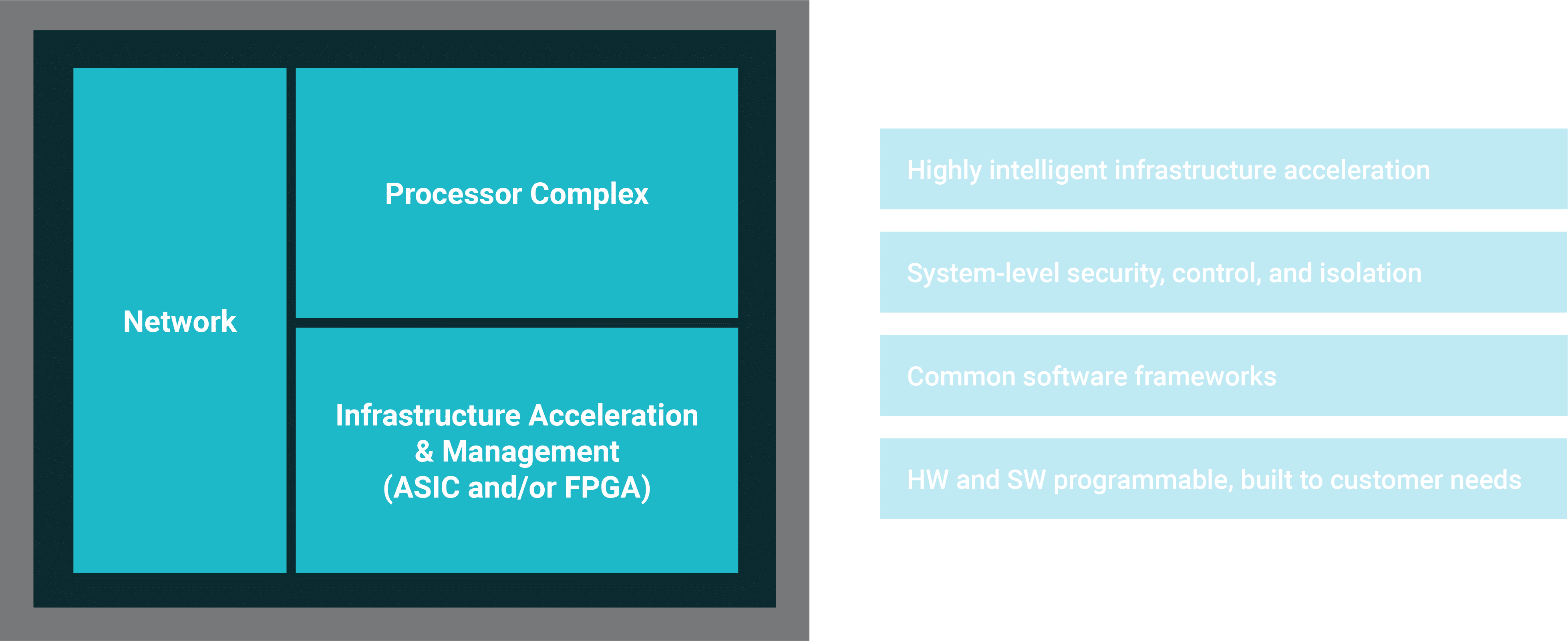

Infrastructure Processing Unit

An IPU offers the ability to:

- Accelerate infrastructure functions, including storage virtualization, network virtualization and security with dedicated protocol accelerators.

- Free up CPU cores by shifting storage and network virtualization functions that were previously done in software on the CPU to the IPU.

- Improve data center utilization by allowing for flexible workload placement.

- Enable cloud service providers to customize infrastructure function deployments at the speed of software.

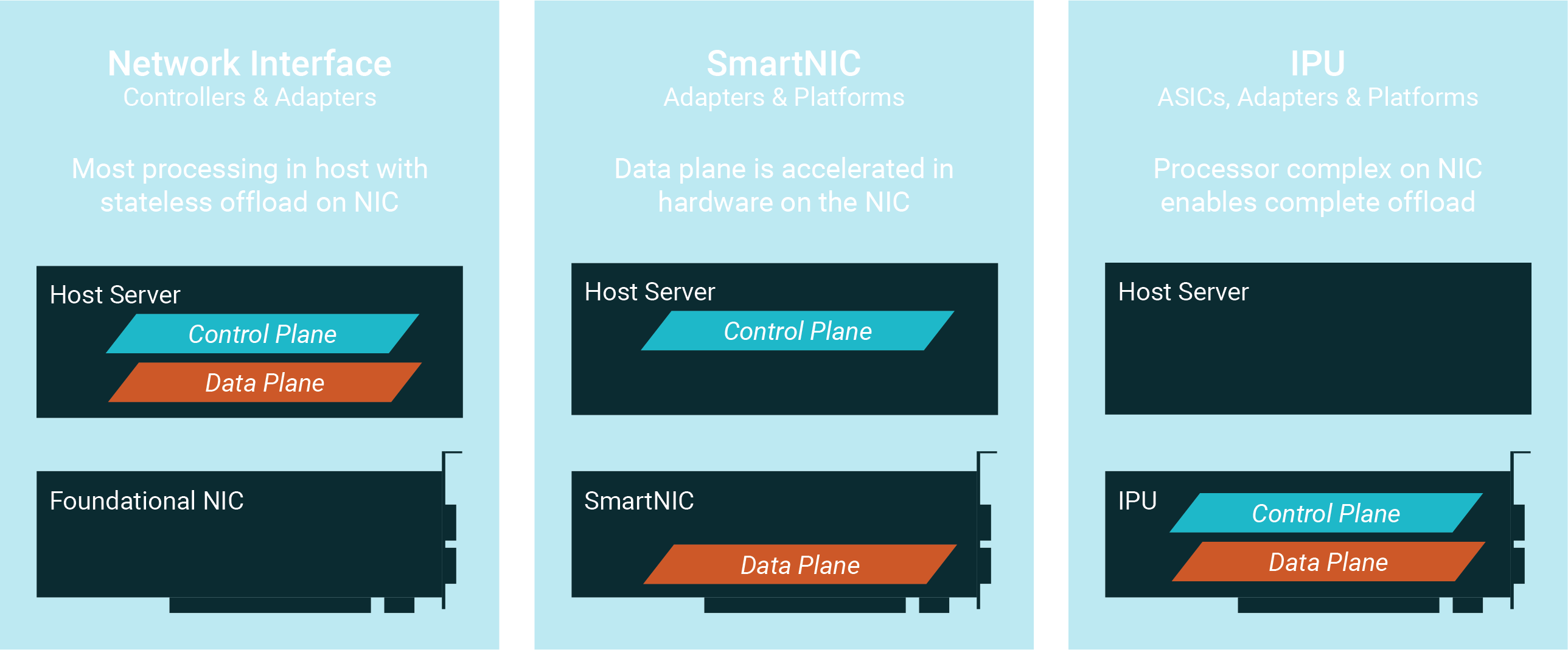

IPUs are architected from the ground up specifically to be an infrastructure control point. Intel® IPUs provide the greatest level of security in a bare-metal hosted environment.

- Research from Google and Facebook has shown 22%[1] to 80%[2] of CPU cycles can be consumed by microservices communication overhead.

- Some of the key motivations for using an IPU are:

- Accelerate networking infrastructure by moving some of the workload onto the IPU, which reduces the total server overhead.

- Reference workloads are localized on the IPU. Performance is increased through:

- Removing some of the PCIe latency between the host and the IPU.

- Offloading software functions to hardware. For reference only: ZSTD compression in hardware can achieve L9 at 100 Gb/s with 64 KB packets and a compression ratio of 32.6%, whereas a similar software solution would consume 369 cores at 1.8 GHz to achieve L7 at a 32.7% compression ratio.

- Application-level hardware optimization can be made in the FPGA and IPU.

- IPUs are reconfigurable and highly programmable, allowing customization of particular features as well as development and deployment on software timescales.

- This IPU-based architecture has some major advantages:

- First, cloud operators can offload infrastructure tasks to the IPU. The IPU accelerators can process this workload very efficiently. This optimizes performance, and the cloud operator can rent out 100% of the CPU to guests, which also helps to maximize revenue.

- The IPU allows the separation of functions so the guest can fully control the CPU. The guest can bring its own hypervisor, but the cloud operator is still fully in control of the infrastructure and can sandbox functions such as networking, security and storage.

- The IPU can also help replace local disk storage directly connected to the server with virtual storage connected over the network. This greatly simplifies data center architecture while allowing a tremendous amount of flexibility.
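The reference figures quoted in this list can be turned into rough core counts. The following is a minimal sketch of that arithmetic, not a sizing tool: `HOST_CORES` is a hypothetical server size chosen only for illustration, and the per-core ZSTD rate assumes the 369-core software figure targets the same 100 Gb/s rate as the hardware figure. Not all of the measured overhead is necessarily offloadable.

```python
# Worked arithmetic for the reference figures quoted above.
# HOST_CORES is a hypothetical server size, not a figure from the source.

HOST_CORES = 64  # assumption for illustration only


def cores_consumed(total_cores: int, overhead_fraction: float) -> float:
    """Cores' worth of CPU cycles spent on overhead at a given fraction."""
    return total_cores * overhead_fraction


def per_core_throughput_gbps(line_rate_gbps: float, cores: int) -> float:
    """Throughput each core must sustain to reach the line rate in software."""
    return line_rate_gbps / cores


low = cores_consumed(HOST_CORES, 0.22)   # lower bound, cited from [1]
high = cores_consumed(HOST_CORES, 0.80)  # upper bound, cited from [2]

# 369 cores at 1.8 GHz, assumed here to be sized for 100 Gb/s
zstd_per_core = per_core_throughput_gbps(100.0, 369)

print(f"Overhead on a {HOST_CORES}-core host: {low:.1f} to {high:.1f} cores")
print(f"Implied software ZSTD rate: {zstd_per_core:.3f} Gb/s per core")
```

Under these assumptions, the spread between the two studies is large, which is why the benefit of offload depends heavily on the workload mix of a given deployment.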

[1] Svilen Kanev, Juan Pablo Darago, Kim M Hazelwood, Parthasarathy Ranganathan, Tipp J Moseley, Gu-Yeon Wei, David Michael Brooks, “Profiling a warehouse-scale computer,” ISCA’15, figure 4. https://research.google/pubs/pub44271.pdf

[2] “Accelerometer: Understanding Acceleration Opportunities for Data Center Overheads at Hyperscale.” https://research.fb.com/publications/accelerometer-understanding-acceleration-opportunities-for-data-center-overheads-at-hyperscale/

Hardware-plus-software solutions accelerate data center networking services

The Napatech F2070X IPU and Link-Storage™ Software provide a perfect solution for storage and network offload, virtualized cloud, cloud-native or bare-metal server virtualization with tenant isolation within the Intel® Infrastructure Processing Unit (IPU) ecosystem.

Link-Storage™ Software

Enterprise and cloud data centers are increasingly adopting the NVMe-oF storage technology because of the advantages it offers in terms of performance, latency, scalability, management and resource utilization. However, implementing the required storage initiator workloads on the server’s host CPU imposes significant compute overheads and limits the number of CPU cores available for running services and applications.

Napatech’s integrated hardware-plus-software solution, comprising the Link-Storage™ software stack running on the F2070X IPU, addresses this problem by offloading the storage workloads from the host CPU to the IPU while maintaining full software compatibility at the application level.

Napatech’s storage offload solution not only frees up host CPU cores which would otherwise be consumed by storage functions but also delivers significantly higher performance than a software-based implementation. This significantly reduces data center CAPEX, OPEX and energy consumption.

The Napatech solution also introduces security isolation into the system, increasing protection against cyber-attacks, which reduces the likelihood of the data center suffering security breaches and high-value customer data being compromised.

Link-Security™ Software

Within enterprise and cloud data centers, Transport Layer Security (TLS) encryption is used to ensure security, confidentiality and data integrity. It provides a secure communication channel between servers over the data center network, ensuring that the data exchanged between them remains private and tamper-proof. However, implementing the TLS protocol on the server’s host CPU imposes significant compute overheads and limits the number of CPU cores available for running services and applications.

Napatech’s integrated hardware-plus-software solution, comprising the Link-Security™ software stack running on the F2070X IPU, addresses this problem by offloading the TLS and TCP protocols from the host CPU to an Infrastructure Processing Unit (IPU) while maintaining full software compatibility at the application level.

Napatech’s security offload solution not only frees up host CPU cores which would otherwise be consumed by security protocols but also delivers significantly higher performance than a software-based implementation. This significantly reduces data center CAPEX, OPEX and energy consumption.

The Napatech solution also introduces security isolation into the system, increasing protection against cyber-attacks, which reduces the likelihood of the data center suffering security breaches and high-value customer data being compromised.

Link-Virtualization™ Software

Operators of enterprise and cloud data centers are continually challenged to maximize the compute performance and data security available to tenant applications, while at the same time minimizing the overall CAPEX, OPEX and energy consumption of their Infrastructure-as-a-Service (IaaS) platforms. However, traditional data center networking infrastructure based around standard or “foundational” Network Interface Cards (NICs) imposes constraints on both performance and security by running the networking stack, as well as related services like the hypervisor, on the host server CPU.

Napatech’s integrated hardware-plus-software solution, comprising the Link-Virtualization™ software stack running on the F2070X IPU, addresses this problem by offloading the networking stack from the host CPU to an Infrastructure Processing Unit (IPU) while maintaining full software compatibility at the application level.

The solution not only frees up host CPU cores which would otherwise be consumed by networking functions but also delivers significantly higher data plane performance than software-based networking, achieving a level of performance that would otherwise require more expensive servers with higher-end CPUs. This significantly reduces data center CAPEX, OPEX and energy consumption.

The IPU-based architecture also introduces security isolation into the system, increasing protection against cyber-attacks, which reduces the likelihood of the data center suffering security breaches and high-value customer data being compromised.

Finally, offloading the infrastructure services such as the networking stack and the hypervisor to an IPU allows data center operators to achieve the deployment agility and scalability normally associated with Virtual Server Instances (VSIs), while also ensuring the cost, energy and security benefits mentioned above.

| FPGA Device and Memory |
| SoC Processor and Memory |
| PCI Express Interfaces |
| Front Panel Network Interfaces |
| Supported Compute and Memory Devices (Mount Options) |
| Size |
| Power and Cooling |
| Time Synchronization (Mount Options) |
| Board Management |
| CPU Operating System |
| Environment and Approvals |
| Application Stack Acceleration Framework (ASAF) |
| Network Offload |
| Storage Offload |
| Security Offload |
| Supported Hardware and Transceivers |
